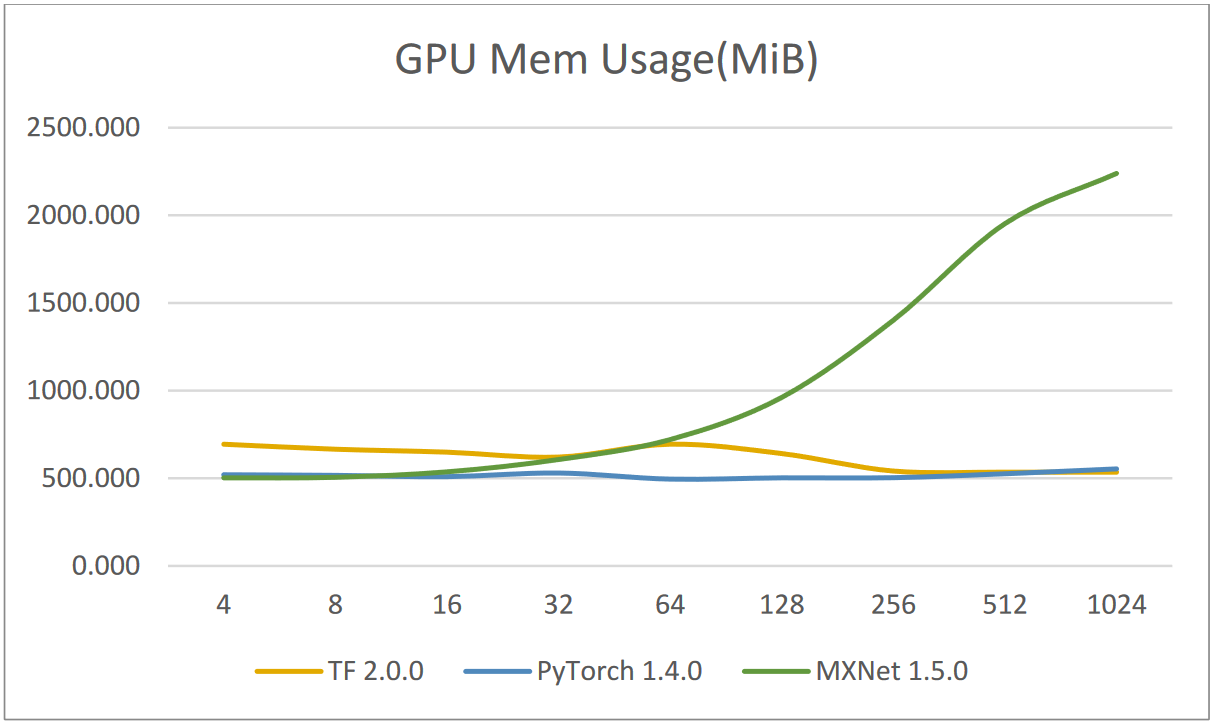

GPU memory usage as a function of batch size at inference time [2D,... | Download Scientific Diagram

GPU Memory Size and Deep Learning Performance (batch size) 12GB vs 32GB -- 1080Ti vs Titan V vs GV100

Performance and Memory Trade-offs of Deep Learning Object Detection in Fast Streaming High-Definition Images

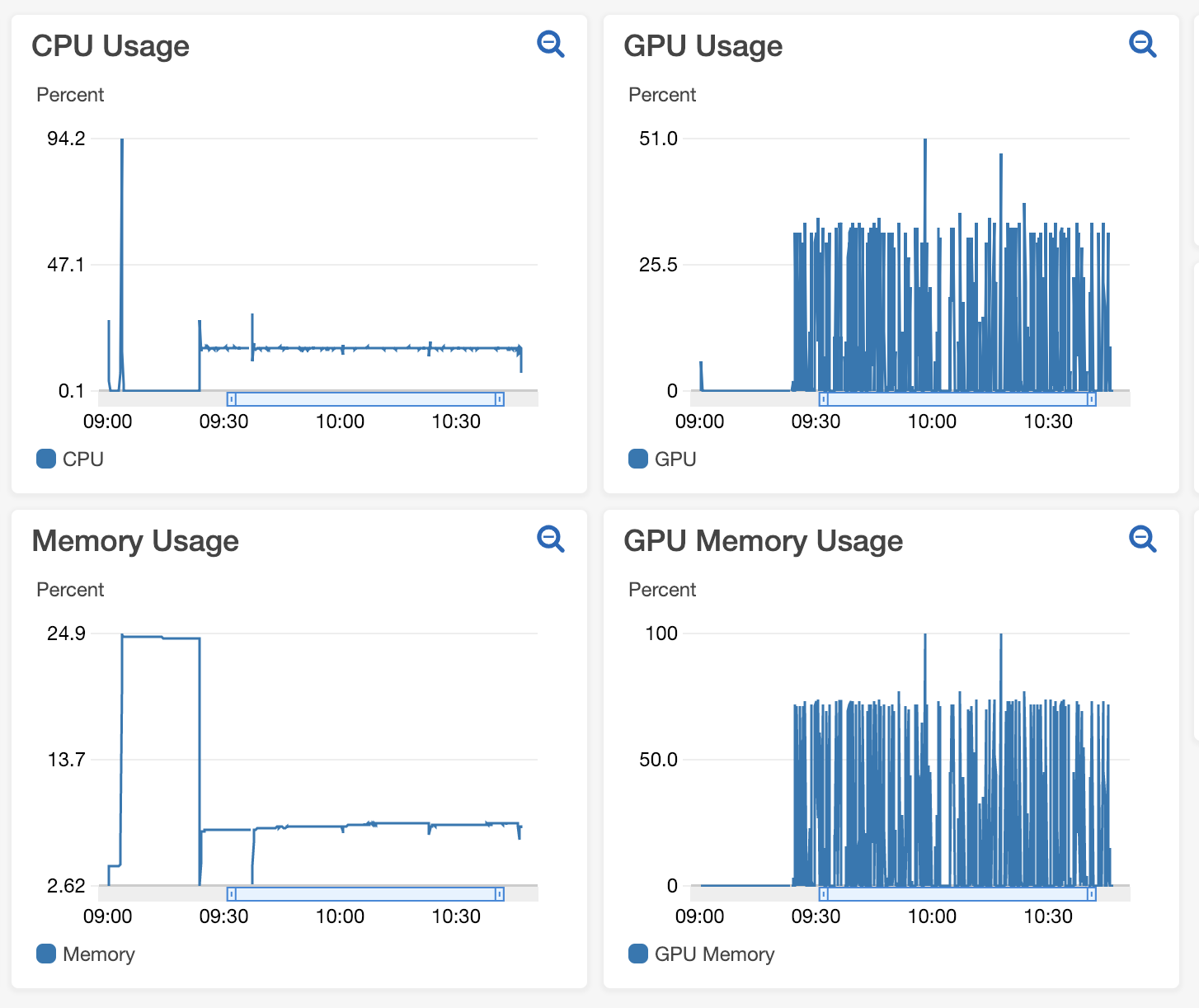

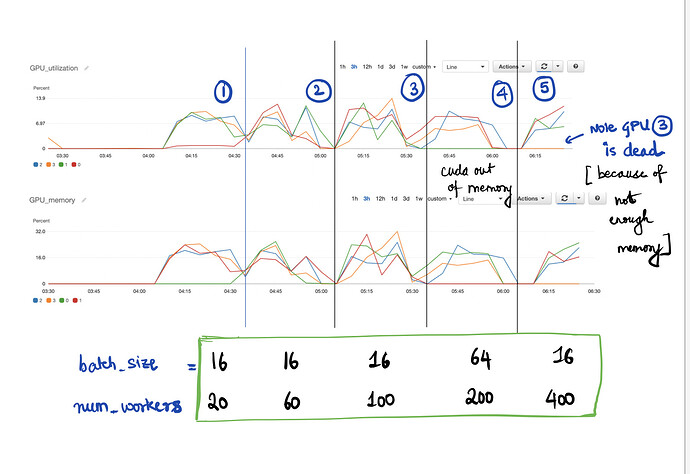

Identifying training bottlenecks and system resource under-utilization with Amazon SageMaker Debugger | AWS Machine Learning Blog

deep learning - Effect of batch size and number of GPUs on model accuracy - Artificial Intelligence Stack Exchange

![[Tuning] Results are GPU-number and batch-size dependent · Issue #444 · tensorflow/tensor2tensor · GitHub](https://user-images.githubusercontent.com/15141326/33256270-a3795912-d351-11e7-83e4-ea941ba95dd5.png)

[Tuning] Results are GPU-number and batch-size dependent · Issue #444 · tensorflow/tensor2tensor · GitHub

Effect of the batch size with the BIG model. All trained on a single GPU. | Download Scientific Diagram

pytorch - Why tensorflow GPU memory usage decreasing when I increasing the batch size? - Stack Overflow